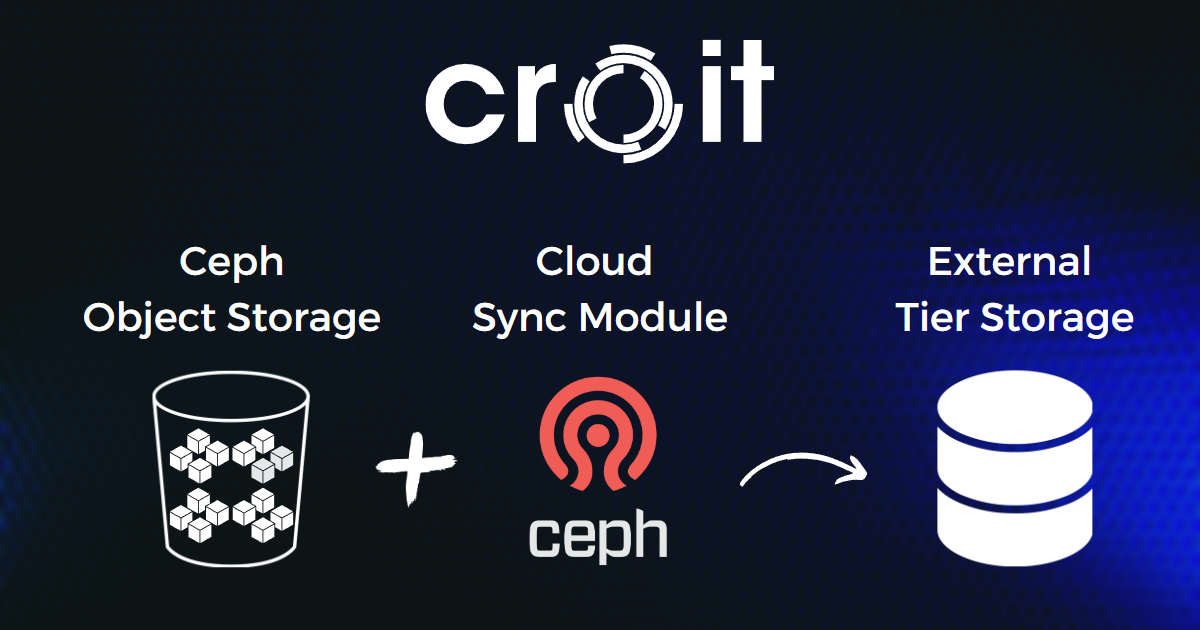

How to Back Up Object Storage Using the Cloud Sync Module

Introduction

Ceph Object Gateway is an object storage interface that provides a RESTful gateway between applications and Ceph Storage Clusters. It uses the Ceph Object Gateway daemon (radosgw), an HTTP server designed for interacting with the Ceph cluster, and provides interfaces that are compatible with both Amazon S3 and OpenStack Swift.

The Ceph Object Gateway can distribute and replicate objects across realms, zone groups, and zones, within which dedicated radosgw instances can be assigned to serve specific purposes. This enables use cases such as backing up object storage to an external cloud cluster, building a custom backup solution on tape drives, or indexing metadata in Elasticsearch.

Cloud Sync Module

Sync modules are built atop the multisite framework and allow for forwarding data and metadata to a different external tier. A sync module allows for a set of actions to be performed whenever a change in data occurs (metadata ops like bucket or user creation are also regarded as changes in data).

The cloud sync module syncs zone data to a remote cloud service in a unidirectional way (data is not synced back from the remote zone). The goal of this module is to enable syncing data with multiple cloud providers.

The currently supported cloud providers are those that are compatible with AWS (S3).

Requirements and Assumptions

The cloud sync module configuration requires one Ceph storage cluster, two Ceph object gateway instances, and one S3 target.

We’ll use three endpoints:

- http://172.31.124.5:80: The endpoint managed by RGW for the existing Ceph storage cluster

- http://172.31.124.6:80: The endpoint of the RGW instance that syncs data to the external S3 provider

- http://192.168.105.5:80: The S3 target we’ll use to push/sync data

This configuration requires a master zone group and a master zone. All gateways will retrieve their configuration from a radosgw daemon on a host within the master zone group and master zone.

Prepare the zones

A realm contains the configuration of zone groups and zones and also serves to enforce a globally unique namespace within the realm.

Create a new realm by opening a command line interface on a host identified to serve in the master zone group and zone. Then, execute the following:

radosgw-admin realm create --rgw-realm=movies --default

A realm must have at least one zone group, which will serve as the master zone group for the realm.

Create a new master zone group by running the following:

radosgw-admin zonegroup create --rgw-zonegroup=us --rgw-realm=movies --master --default

A zone group must have at least one zone, which will serve as the master zone for the zone group.

Create a new master zone by running the following:

radosgw-admin zone create --rgw-zonegroup=us --rgw-zone=us-east --endpoints=http://172.31.124.5:80 --master --default

To synchronize the data with the external provider, one more zone is needed to serve as a bridge between the cluster and the cloud:

radosgw-admin zone create --rgw-zonegroup=us --rgw-zone=sync --endpoints=http://172.31.124.6:80 --tier-type=cloud

Create a system user

The radosgw daemons must authenticate before pulling realm and period information. In the master zone, create a system user to facilitate authentication between daemons:

radosgw-admin user create --uid="synchronization-user" --display-name="Synchronization User" --system

Make a note of the access_key and secret_key, as the sync zone will require them to authenticate with the master zone. Add the system user to both zones with:

radosgw-admin zone modify --rgw-zone={zone-name} --access-key={access-key} --secret={secret}

S3 configuration

In order to synchronize and send the data to the external provider, it is necessary to get and define the endpoints and the S3 user credentials in the sync zone:

radosgw-admin zone modify --rgw-zonegroup=us --rgw-zone=sync --tier-config=connection.endpoint=http://192.168.105.5:80,connection.access_key={access-key},connection.secret={secret}

If any of your keys starts with a number, you will be unable to configure it.
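Because the `--tier-config` value is a long comma-separated string that is easy to mistype, a small helper can assemble it and reject keys that start with a digit, per the caveat above. This is a minimal sketch: the function name `build_tier_config` is hypothetical, and it only builds the string, leaving the `radosgw-admin` call itself to you.

```shell
# Build the value for --tier-config from its three parts.
# $1 = S3 target endpoint, $2 = access key, $3 = secret key.
# Fails (non-zero exit) if either key starts with a digit.
build_tier_config() {
  for key in "$2" "$3"; do
    case "$key" in
      [0-9]*)
        echo "key starts with a digit; generate a different key" >&2
        return 1
        ;;
    esac
  done
  printf 'connection.endpoint=%s,connection.access_key=%s,connection.secret=%s\n' \
    "$1" "$2" "$3"
}
```

The output can then be passed to the `zone modify` command shown earlier, for example as `--tier-config="$(build_tier_config http://192.168.105.5:80 {access-key} {secret})"`.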

Update the period

After updating both zones' configuration, update the period:

radosgw-admin period update --commit

Updating the period changes the epoch and ensures that other zones will receive the updated configuration.
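Before moving on to the gateway configuration, it may be worth confirming that both zones are listed in the zone group. The following is a rough sketch, assuming the JSON printed by `radosgw-admin zonegroup get` lists each zone with a `"name"` field. The helper name `zones_present` is hypothetical, and the text match is deliberately simple; a JSON-aware tool would be more robust.

```shell
# Succeeds if every zone name given as an argument appears as a
# "name" entry in the JSON read from standard input.
zones_present() {
  json="$(cat)"
  for zone in "$@"; do
    if ! printf '%s\n' "$json" | grep -Eq "\"name\":[[:space:]]*\"$zone\""; then
      echo "zone not found: $zone" >&2
      return 1
    fi
  done
}
```

A possible invocation is `radosgw-admin zonegroup get --rgw-zonegroup=us | zones_present us-east sync`.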

Radosgw configuration

There must be a radosgw assigned to each of the zones; for this, we will edit the Ceph configuration file and indicate which zone each daemon is in charge of:

[client.rgw.$(hostname)]

host = $(hostname)

rgw zone = us-east

[client.rgw.$(hostname)]

host = $(hostname)

rgw zone = sync

On each of the object gateway hosts, restart the Ceph object gateway service:

systemctl restart ceph-radosgw@rgw.`hostname -s`

Checking the sync

At this point, it is possible to create/upload new buckets and objects to the storage cluster (using any S3 client like s3cmd, aws-cli, etc.), and they should immediately be synced with the external cloud.
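As a quick smoke test of this, one could create a bucket and upload a file through the master zone's endpoint, then list the buckets on the S3 target. The sketch below only prints the aws-cli commands so they can be reviewed before being run. The function name and the aws-cli profile names `ceph` and `target` are assumptions for illustration, not part of the original setup. Depending on the module's target path settings, the bucket name on the target may differ from the source bucket name, so the last command lists all buckets rather than one specific bucket.

```shell
# Print aws-cli commands for a sync smoke test (nothing is executed).
# $1 = source RGW endpoint, $2 = S3 target endpoint,
# $3 = bucket name, $4 = local file to upload.
# "ceph" and "target" are hypothetical aws-cli profiles holding the
# credentials for the Ceph cluster and the S3 target respectively.
smoke_test_commands() {
  printf 'aws --profile ceph --endpoint-url %s s3 mb s3://%s\n' "$1" "$3"
  printf 'aws --profile ceph --endpoint-url %s s3 cp %s s3://%s/\n' "$1" "$4" "$3"
  printf 'aws --profile target --endpoint-url %s s3 ls\n' "$2"
}
```

For example, `smoke_test_commands {source-endpoint} http://192.168.105.5:80 backup-test hello.txt` prints three commands: create the bucket, upload the file, and list the buckets on the target.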

The synchronization status can be checked at any time as follows:

radosgw-admin sync status --rgw-zone=sync

realm 46669d35-f7ed-4374-8247-2b8f41218109 (movies)

zonegroup 881cf806-f6d2-47a0-b7dc-d65ee87f8ef4 (us)

zone 7ead9532-0938-4698-9b4a-2d84d0d00869 (sync)

metadata sync syncing

full sync: 0/64 shards

incremental sync: 64/64 shards

metadata is caught up with master

data sync source: 303a00f5-f50d-43fd-afee-aa0503926952 (us-east)

syncing

full sync: 0/128 shards

incremental sync: 128/128 shards

data is caught up with source

Conclusion

Sometimes you lack the infrastructure or resources to maintain a multisite deployment (which requires at least two clusters), or you simply prefer to delegate the maintenance of your object backups to an external provider. In those cases, this module is an excellent option: the cloud sync module lets you keep a backup of your Ceph storage cluster with any S3-compatible external provider in a simple way.