CephFS

What is CephFS?

CephFS is a POSIX-compliant file system that can be accessed by multiple clients at the same time. It can be mounted with the Linux kernel client or in user space via FUSE, and applications can also access it through libcephfs. Metadata (e.g., file names, directory contents, permissions) and data are stored in separate pools. Metadata is managed by an MDS (Metadata Server), while clients read and write file data directly from and to the OSDs.
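The split between metadata and data is visible when a file system is created on a plain Ceph cluster: it is built from one metadata pool and one initial data pool. croit performs these steps for you when you set up CephFS in the UI, so the following is only a minimal sketch for illustration. It prints the commands instead of running them, and the file system and pool names in the usage example are assumptions.

```shell
# Minimal sketch: print (do not run) the commands that create a CephFS
# from a metadata pool and a data pool. Review the output before using it.
cephfs_create_plan() {
  local fs_name="$1" meta_pool="$2" data_pool="$3"
  # pg_num is omitted; with the PG autoscaler (enabled by default in
  # recent releases) Ceph adjusts it over time.
  printf 'ceph osd pool create %s\n' "$meta_pool"
  printf 'ceph osd pool create %s\n' "$data_pool"
  # The metadata pool is named first, followed by the default data pool.
  printf 'ceph fs new %s %s %s\n' "$fs_name" "$meta_pool" "$data_pool"
}
```

For example, `cephfs_create_plan cephfs cephfs_metadata cephfs_data` prints three commands; printing instead of executing keeps the sketch safe to try on a cluster that croit already manages.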

Metadata Servers (MDS)

CephFS requires at least one MDS. Without an active MDS, clients cannot access the file system.

How Many MDS?

- Each file system can have multiple active MDS.

- Each active MDS needs a separate standby MDS for failover.

- Typically, one active MDS and one standby MDS are sufficient.

- Multiple active MDS are recommended for systems with over 100 million files or a high number of metadata operations.

Hardware Requirements

- Depending on the workload, an MDS can be CPU-bound, memory-bound, or both. The MDS is largely single-threaded, so CPUs with a high clock rate perform best.

- Colocating MDS with OSDs or other services means they compete for resources.

- Make sure the cephfs_metadata pool is backed by the fastest OSDs in your cluster. croit automatically tries to place this pool on NVMe OSDs.
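If you want to check or reproduce this placement on the command line, one common approach is a replicated CRUSH rule restricted to the `nvme` device class, assigned to the metadata pool. The sketch below only prints the commands; the rule name `replicated_nvme`, the CRUSH root `default`, and the `host` failure domain are assumptions to adapt to your cluster.

```shell
# Minimal sketch: print the commands that pin a pool to NVMe OSDs.
# Assumptions: CRUSH root "default", failure domain "host", and OSDs that
# carry the "nvme" device class (list classes with: ceph osd crush class ls).
metadata_on_nvme_plan() {
  local pool="${1:-cephfs_metadata}"
  local rule="${2:-replicated_nvme}"
  # Replicated rule that only selects OSDs of device class "nvme".
  printf 'ceph osd crush rule create-replicated %s default host nvme\n' "$rule"
  # Assigning the rule makes Ceph migrate the pool's data to matching OSDs.
  printf 'ceph osd pool set %s crush_rule %s\n' "$pool" "$rule"
}
```

Changing a pool's CRUSH rule triggers data movement, so it is best done before the file system holds much metadata.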

Setting mds cache memory limit

- Navigate to Config in the UI.

- Click Add.

- Search for mds cache memory limit.

- Set a value that fits your workload and the RAM available on your MDS servers. The default of 4 GiB is rather low for most workloads.

Important: Ceph lets the MDS cache exceed this limit by 50% before it raises a health warning, to allow for usage spikes. Keep this headroom in mind when sizing the memory of your MDS servers.
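The limit is stored in bytes, and the warning point follows from it. The small sketch below converts GiB to bytes and computes the cache size at which the health warning appears, assuming the default `mds_health_cache_threshold` of 1.5. The 16 GiB figure used in the usage note is only an illustration, not a sizing recommendation.

```shell
# Convert whole GiB to bytes (1 GiB = 1024^3 bytes).
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Cache size in bytes at which Ceph warns, assuming the default
# mds_health_cache_threshold of 1.5 (150% of the configured limit).
cache_warn_bytes() {
  echo $(( $1 * 3 / 2 ))
}

# Print the CLI equivalent of setting the limit on the croit Config page.
cache_limit_cmd() {
  printf 'ceph config set mds mds_cache_memory_limit %s\n' "$(gib_to_bytes "$1")"
}
```

With a 16 GiB limit, `cache_limit_cmd 16` prints `ceph config set mds mds_cache_memory_limit 17179869184`, and `cache_warn_bytes 17179869184` returns `25769803776` (24 GiB), the cache size at which the warning would appear.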

Setting Up MDS

- Navigate to CephFS in the UI.

- You will be prompted to choose servers for your MDS.

- Select at least two servers (1 active + 1 standby).

More Than One Active MDS

By default, a file system has only one active MDS. To configure more:

- Navigate to CephFS -> MDS.

- Adjust Max MDS.

Important: Changing the number of active MDS might temporarily impact clients.
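On the command line, Max MDS corresponds to the file system's `max_mds` setting. The sketch below validates the requested count and prints the commands rather than running them; the file system name `cephfs` in the usage note is an assumption. Remember to provide one standby MDS per active MDS.

```shell
# Minimal sketch: print the commands that change the number of active MDS.
set_max_mds_plan() {
  local fs_name="$1" count="$2"
  # Accept only plain decimal numbers (rejects "", "two", "-1").
  case "$count" in
    ''|*[!0-9]*) echo "max_mds must be a positive integer" >&2; return 1 ;;
  esac
  if [ "$count" -lt 1 ]; then
    echo "max_mds must be at least 1" >&2
    return 1
  fi
  printf 'ceph fs set %s max_mds %s\n' "$fs_name" "$count"
  # Show ranks and standbys afterwards to confirm the change.
  printf 'ceph fs status %s\n' "$fs_name"
}
```

For example, `set_max_mds_plan cephfs 2` prints `ceph fs set cephfs max_mds 2` followed by a status check.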

Running Multiple MDS per Server

We recommend running only one MDS per server. If you run multiple MDS per server, several active or standby-replay MDS can end up on the same server. If that server goes down, this can cause CephFS downtime or slow recovery.

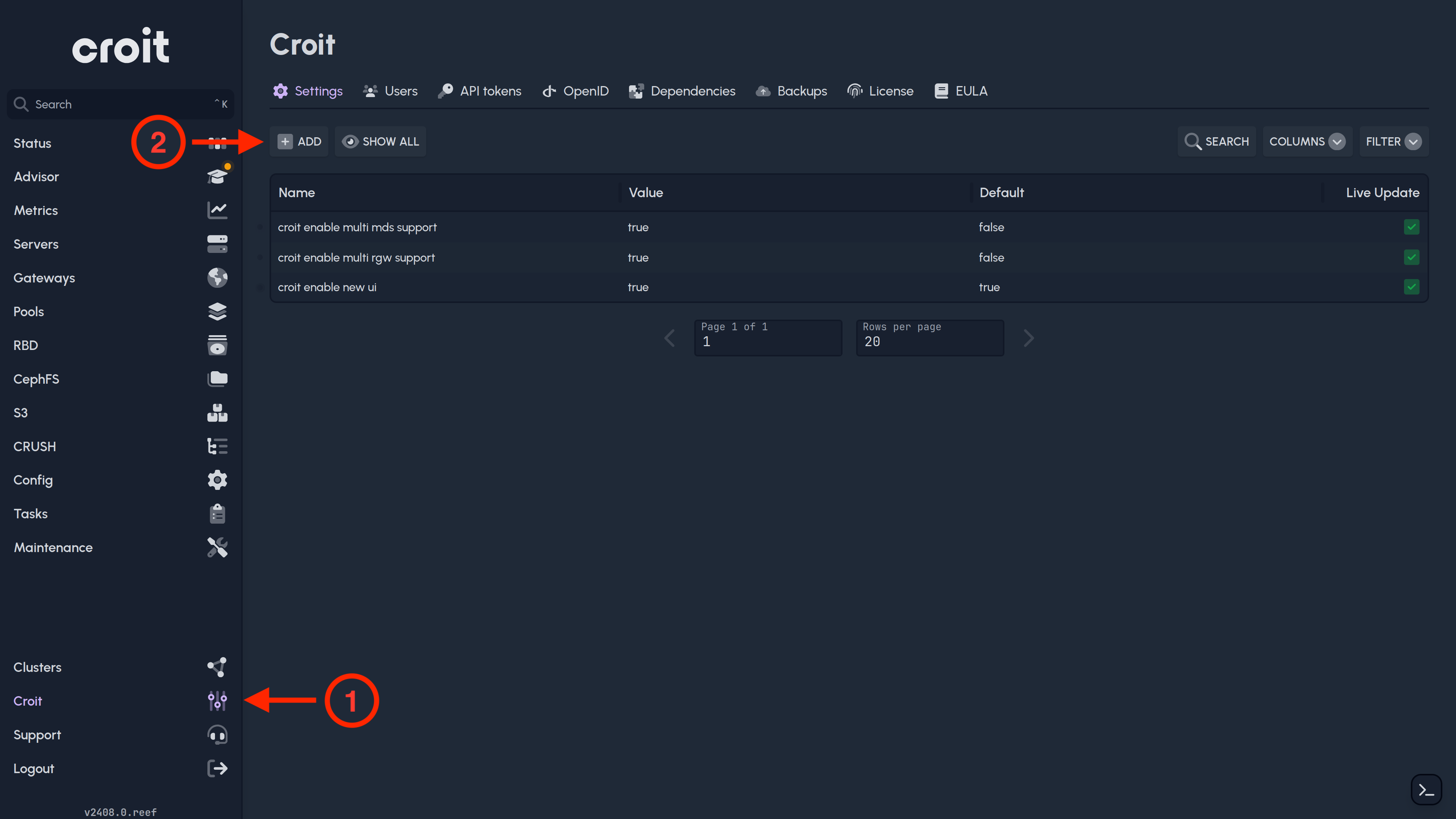

If you still want to run multiple MDS per server, you need to first enable this feature in croit:

- Navigate to Croit in the UI (bottom left) to access the croit settings page.

- Click Add and search for croit enable multi mds support.

- Enable the toggle button and click Save.

The user interface now allows you to add multiple MDS instances on a single server.

Data Placement

To control in which pool (and namespace) new files in a directory are stored, you have two options:

In Croit

- Go to CephFS.

- Select a folder and click Edit -> Pool Layout.

- You can adjust both the destination pool and the namespace.

On the mounted Filesystem

On a mounted file system, you can achieve the same with extended attributes. For example, to store new files in /mnt/cephfs/my_nvme_pool on the cephfs_data_nvme pool:

```shell
setfattr -n ceph.dir.layout.pool -v cephfs_data_nvme /mnt/cephfs/my_nvme_pool
```

Important: The layout applies only to files created after the change. Existing files inside the folder are not moved to the new pool.
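Two practical notes, offered as a sketch rather than croit's own procedure. First, the target pool must already be a data pool of the file system (on plain Ceph: `ceph fs add_data_pool <fs_name> cephfs_data_nvme`), and you can check the result with `getfattr -n ceph.dir.layout.pool /mnt/cephfs/my_nvme_pool`. Second, because only new files pick up the directory layout, existing files can be moved by copying each one to a new file in the same directory and renaming the copy over the original. The helper name and the `.relayout.tmp` suffix below are hypothetical. The sketch assumes nothing writes to the files while it runs, enough free space for one extra copy of the largest file, and file names without newlines; hard links are split into separate files.

```shell
# Minimal sketch: rewrite every regular file below a directory so that the
# new copy is created with the directory's current layout (pool/namespace).
relayout_existing_files() {
  local dir="$1" list f tmp
  list="$(mktemp)" || return 1
  # Snapshot the file list first so the copies created below are not revisited.
  find "$dir" -type f > "$list"
  while IFS= read -r f; do
    tmp="$f.relayout.tmp"
    # The copy is a new file, so it inherits the directory layout;
    # the rename then replaces the original under the same name.
    if ! { cp -p -- "$f" "$tmp" && mv -f -- "$tmp" "$f"; }; then
      echo "failed to rewrite: $f" >&2
      rm -f -- "$tmp" "$list"
      return 1
    fi
  done < "$list"
  rm -f -- "$list"
}
```

Run it after setting the layout, e.g. `relayout_existing_files /mnt/cephfs/my_nvme_pool`. Copy-and-rename is used because a file's layout cannot be changed once the file contains data.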

Mounting the Filesystem

There are multiple ways to mount the filesystem.

The Native Way

To mount your CephFS on a Linux system, you need to:

- Set up Ceph keys.

- Download ceph.conf and the key, and move both to /etc/ceph/ on the system.

- Ensure the system runs an up-to-date kernel to avoid known client bugs.

- Ensure the system can reach your MONs over the network.

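```shell
mount -t ceph name@.fs_name=/ /mnt/cephfs -o mon_addr=10.0.0.1/10.0.0.2/10.0.0.3
```

Replace `name` with the Ceph client name (without the `client.` prefix), `fs_name` with the name of your file system, and the `mon_addr` values with your actual MON addresses. Separate multiple MON addresses with `/`, because commas separate mount options.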
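To make the mount persistent, the same device string can go into /etc/fstab. The sketch below prints a mount command and a matching fstab line from a comma-separated MON list, converting it to the `/`-separated form that `mon_addr` expects. The client name `cephuser` and file system name `cephfs` in the usage note are assumptions; on a plain Ceph cluster, a key limited to one file system can be created with `ceph fs authorize cephfs client.cephuser / rw`.

```shell
# Turn "10.0.0.1,10.0.0.2" into "10.0.0.1/10.0.0.2": inside mount options,
# commas separate options, so mon_addr lists MONs with "/" instead.
mon_addr_from_list() {
  printf '%s\n' "$1" | tr ',' '/'
}

# Print a one-off mount command.
# Arguments: client name (without "client."), fs name, mount point, MON list.
cephfs_mount_cmd() {
  printf 'mount -t ceph %s@.%s=/ %s -o mon_addr=%s\n' \
    "$1" "$2" "$3" "$(mon_addr_from_list "$4")"
}

# Print an /etc/fstab line for the same mount.
# noatime avoids access-time updates; _netdev marks it as a network mount.
cephfs_fstab_line() {
  printf '%s@.%s=/ %s ceph mon_addr=%s,noatime,_netdev 0 0\n' \
    "$1" "$2" "$3" "$(mon_addr_from_list "$4")"
}
```

For example, `cephfs_fstab_line cephuser cephfs /mnt/cephfs 10.0.0.1,10.0.0.2,10.0.0.3` prints `cephuser@.cephfs=/ /mnt/cephfs ceph mon_addr=10.0.0.1/10.0.0.2/10.0.0.3,noatime,_netdev 0 0`.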